Uncertainty, the Black Box, and the Leadership We Bring to AI

There’s a strange truth most people won’t admit: We’re using tools we don’t fully understand, and they’re shaping decisions we can’t always explain. AI, especially in its generative and predictive forms, functions like a black box. We feed it input. We receive output. Most of the time, it’s good enough.

We know it works. We know it’s fallible (enter probability, enter ego). We understand it to a degree, but not in the way we might understand a traditional system or workflow. And yet we use it more and more, because the value it delivers is hard to pass up.

This introduces a quiet kind of uncertainty. Not just about the technology, but about our relationship to it. That relationship reveals something essential about how we lead.

The Illusion of Understanding

AI often gives the impression of being more explainable than it is. We can adjust the prompts, analyze the output, even “tune” its tone. But when we treat its answers as fact rather than possibility, we stop leading. We start deferring.

The black box itself isn’t necessarily the issue. What matters is what we bring to it: how we project confidence, seek shortcuts, or avoid the discomfort of uncertainty.

Are we using AI to sharpen our thinking, or to bypass the hard parts? Are we including it in the creative process, or offloading responsibility to it?

The black box becomes a mirror. It shows us how we handle ambiguity.

The Quantum Perspective: AI Isn’t the Problem

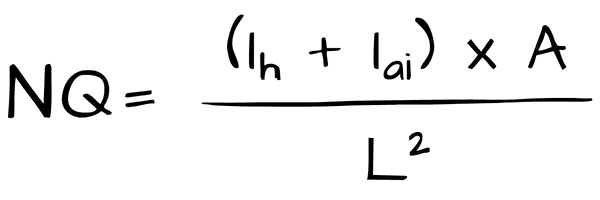

In the systems intelligence formula, AI, or I_ai, can be a multiplier of insight and capacity.

But only if it’s integrated into the system with clarity and intention (see alignment, A).

When we over-rely on it, we dull our human insight (I_h) and risk losing what makes our leadership distinct.

When we underuse it, we miss opportunities to reduce latency (L) and streamline complexity.

And when we treat its output as final, we often disrupt alignment (A), for example when not everyone agrees on what’s valid, meaningful, or even real.

So it’s not about the tool. It’s about the relationship.

The smartest systems know how to collaborate with AI, not just adopt it.

Uncertainty as a Leadership Space

The uncertainty in AI isn’t a defect. It’s an invitation. A well-designed black box creates space for human leadership to interpret, to discern, to decide what matters. When used well, AI offers more than speed. It helps us:

Surface patterns we might not have seen

Explore new language and frames

Stay in motion, even when answers aren’t final

But this only works if we remain actively engaged, if we treat AI as a co-pilot rather than an autopilot, and if we are willing to lead inside the ambiguity, not just react to it.

What It Means for Leadership Now

Leaders don’t need to become AI experts. But they do need to get better at recognizing how their teams relate to uncertainty.

Ask yourself:

Are we using AI to expand our thinking, or to settle for fast answers?

Are we reviewing outputs with discernment, or defaulting to what sounds best?

Are we creating a culture that invites deeper signal, or one that avoids ambiguity?

Because the real shift isn’t just technological. It’s relational. AI doesn’t eliminate uncertainty. It relocates it. The question is, who owns that space? In systems that function well, leadership lives there. It holds that uncertainty long enough for intelligence to emerge.

Final Thought

We don’t need to eliminate the black box. We need to become more thoughtful about how we show up around it. The question isn’t, “Does AI work?” It’s, “Are we showing up in a way that makes the most of what it offers?” That’s where value is created. That’s where leadership lives.